Use Case 1

Detection of Possible Brute Force Attack

With the evolution of faster and more efficient password cracking tools, brute force attacks against an organization's services are on the rise. As a best practice, every organization should configure logging for security events such as repeated invalid login attempts and modifications to system files, so that any attack underway is noticed and handled before it succeeds. Organizations generally apply these security policies via a Group Policy Object (GPO) to all the hosts in their network.

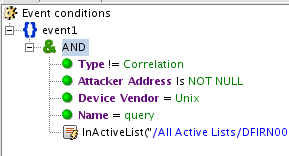

To check for a brute force pattern, I have enabled auditing on logon events in the Local Security Policy, and I will be feeding my system's Win:Security logs to Splunk to look for that pattern in local login attempts.

Note: EventCode 4625 is used in newer versions of the Windows family, such as Windows 7. In older versions, the event codes for invalid login attempts are 675 and 529.

After this, I logged off my machine and entered the password incorrectly three times to simulate a brute force attack.

Since these activities get logged in Win:Security, which in turn feeds Splunk in real time, an alert will be created in Splunk, giving analysts an incident to investigate and respond to, for example by changing the firewall policy to blacklist the offending IP.
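
The detection logic behind such an alert can be sketched outside Splunk as well. The following is a minimal Python illustration, not the search used in this article: it assumes failed logons have already been parsed into `(timestamp, event_code, account)` tuples, and the function name, the threshold of three, and the five-minute window are all assumptions chosen for the example.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

FAILED_LOGON = 4625  # event code for a failed logon on newer Windows versions


def find_brute_force(events, threshold=3, window=timedelta(minutes=5)):
    """Return accounts with at least `threshold` failed logons inside `window`.

    `events` is an iterable of (timestamp, event_code, account) tuples,
    a hypothetical record shape chosen for this sketch.
    """
    recent = defaultdict(deque)  # account -> timestamps of recent failures
    flagged = set()
    for ts, code, account in sorted(events, key=lambda e: e[0]):
        if code != FAILED_LOGON:
            continue
        failures = recent[account]
        failures.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while ts - failures[0] > window:
            failures.popleft()
        if len(failures) >= threshold:
            flagged.add(account)
    return flagged


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0, 0)
    sample = [(t0 + timedelta(seconds=20 * i), 4625, "alice") for i in range(3)]
    print(find_brute_force(sample))
```

A sliding window is used rather than a simple total so that a handful of mistyped passwords spread over a whole day does not raise the same alert as three failures within a minute.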

Use Case 2

Detection of Insider Threat

Reportedly, more than 30 percent of attacks on organizations come from malicious insiders. Therefore, every organization must apply the same level of security policy to insiders as it does to outsiders.

Acceptable Use Monitoring (AUP)

Acceptable Use Monitoring covers a basic question: which resource is being accessed, by whom, and when. Organizations generally publish policies so that users understand how to use the organization’s resources in the best way. Organizations should develop a baseline document that sets out threshold limits, critical resource information, user roles, and policies, and use that baseline to monitor user activity, including after business hours, with the help of the SIEM solution.

For example, the following illustrates logging of user activity on an object. For demonstrative purposes, I have created a file named “Test_Access” on my system. Auditing on object access is enabled on my system in the Local Security Policy.

Enabling auditing in the security policy alone is not enough; I also have to enable auditing on the file itself, “Test_Access” in this case. I have enabled auditing for the group “Everyone” on this file. Organizations should fingerprint all sensitive files, along with the corresponding privileges and the user groups that have access to them.

For demonstrative purposes, I have selected all the object properties to be audited.

After this, I accessed the “Test_Access” file, which generates an event in the Security log with Event ID 4663, giving the user name, the action performed, the time of access, etc. This useful information can be fed into the SIEM solution through security logs to detect any unauthorized or suspicious object access.
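
As a rough illustration of how such events might be checked once they reach the SIEM, here is a minimal Python sketch. It assumes each 4663 event has already been parsed into a dictionary; the field names (`event_id`, `user`, `object`) and the allow-list structure are hypothetical and do not reflect any particular product's schema.

```python
OBJECT_ACCESS = 4663  # event ID logged when an audited object is accessed


def unauthorized_accesses(events, allowed_users):
    """Return object-access events whose user is not permitted for that object.

    `events`: list of dicts with hypothetical keys "event_id", "user", "object".
    `allowed_users`: dict mapping an object name to the set of permitted users.
    Objects absent from `allowed_users` are treated as unmonitored and skipped.
    """
    hits = []
    for event in events:
        if event["event_id"] != OBJECT_ACCESS:
            continue
        permitted = allowed_users.get(event["object"])
        if permitted is not None and event["user"] not in permitted:
            hits.append(event)
    return hits


if __name__ == "__main__":
    baseline = {"Test_Access": {"alice"}}
    log = [
        {"event_id": 4663, "user": "alice", "object": "Test_Access"},
        {"event_id": 4663, "user": "mallory", "object": "Test_Access"},
    ]
    for hit in unauthorized_accesses(log, baseline):
        print(hit["user"], "accessed", hit["object"])
```

The allow-list plays the role of the baseline document: it only works if the sensitive files and their legitimate users have been written down first.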

Organizations should develop fingerprints of all sensitive documents, files, and folders, and feed this information, together with the output of the relevant security solutions such as data leakage prevention (DLP) solutions, application logs, WAF, etc., into the SIEM solution to detect a potential insider threat. Organizations can develop the following use cases in the SIEM solution under AUP.

· Top malicious DNS requests from user.

· Incidents from users reported at DLP, spam filtering, web proxy, etc.

· Transmission of sensitive data in plain text.

· Third-party users' network resource access.

· Resource access outside business hours.

· Sensitive resource access failure by user.

· Privileged user access by resource criticality, access failure, etc.
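
One of the use cases in the list above, resource access outside business hours, comes down to a simple time check. The sketch below is a minimal Python version; the 09:00 to 18:00 window and the Monday to Friday working week are assumptions for illustration and should come from the organization's own baseline document.

```python
from datetime import datetime, time


def outside_business_hours(ts, start=time(9, 0), end=time(18, 0),
                           workdays=range(0, 5)):
    """Return True if `ts` is outside start..end, or falls on a non-workday.

    `workdays` uses datetime.weekday() numbering (0 = Monday, 6 = Sunday).
    """
    if ts.weekday() not in workdays:
        return True
    return not (start <= ts.time() < end)


if __name__ == "__main__":
    for ts in (datetime(2024, 1, 1, 10, 0), datetime(2024, 1, 1, 22, 30)):
        print(ts, outside_business_hours(ts))
```

Events for which this check is true can then be filtered down to critical resources only, which keeps the alert volume manageable.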

Use Case 3

Application Defense Check

Besides network, perimeter, and endpoint security, organizations must develop security measures to protect applications. In response to attacks like SQL injection, cross-site scripting (XSS), buffer overflow, and insecure direct object references, organizations have adopted measures such as secure coding practices and the use of a Web Application Firewall (WAF), which can inspect traffic at layer 7 (the application layer) against signatures, pattern-based rules, etc. Along with application logs, organizations must also feed the SIEM with logs from technologies such as WAF, so that it can correlate various security incidents to detect a potential web application attack. One very important point to check in a sensitive application is that it encrypts sensitive information such as PII in its logs as well, because these logs will be fed into the SIEM, and if they are unencrypted, sensitive information could be exposed there.

Organizations must also develop a strategy to secure the operating system (OS) platform on which the application is hosted. OS and application performance logging features must also be enabled. Below are some of the use cases that can be implemented in SIEM to check application defense.

· Top web application attacks per server.

· Malicious SQL commands issued by administrator.

· Suspicious application performance indicators and resource utilization.

· Application platform (OS) patch-related status.

· Web attacks following configuration changes on applications.
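
As an illustration of the first use case in the list above, the following minimal Python sketch ranks attack types per server. It assumes WAF events have already been reduced to `(server, attack_type)` pairs; that shape, the function name, and the sample labels are hypothetical.

```python
from collections import Counter, defaultdict


def top_attacks_per_server(waf_events, n=3):
    """Return, for each server, its `n` most frequent attack types with counts.

    `waf_events` is an iterable of (server, attack_type) pairs,
    a hypothetical shape chosen for this sketch.
    """
    per_server = defaultdict(Counter)
    for server, attack_type in waf_events:
        per_server[server][attack_type] += 1
    return {server: counts.most_common(n) for server, counts in per_server.items()}


if __name__ == "__main__":
    sample = [("web01", "sqli"), ("web01", "sqli"), ("web01", "xss"), ("web02", "xss")]
    for server, ranking in top_attacks_per_server(sample).items():
        print(server, ranking)
```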

Use Case 4

Suspicious Behavior of Log Source

Expected Host/Log Source Not Reporting

Log sources are the feeds for any SIEM solution. Most SIEM solutions these days come with an agent-manager deployment model, which means that lightweight SIEM agent software is installed on every log source to collect logs and pass them to a manager for analysis. An attacker who has gained control of a compromised machine or account tends to stop all such agent services so that the unauthorized and illegitimate activity goes unnoticed.

To counter such malicious actions, the SIEM should be configured to raise an alert if a host stops forwarding logs for longer than a threshold limit. For example, a search query (SPL) in Splunk can raise an alert if a host has not forwarded logs for more than one hour.

As soon as an alert is received with the IP address of the machine under attack, the Incident Response Team (IRT) can start mitigating the issue.
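
The "host not reporting" check described above reduces to comparing each host's most recent event time against the current time. The following is a minimal Python sketch of that logic, not the Splunk search itself; the `last_seen` mapping and the function name are assumptions for illustration.

```python
from datetime import datetime, timedelta


def silent_hosts(last_seen, now, max_silence=timedelta(hours=1)):
    """Return the hosts whose latest event is older than `max_silence`.

    `last_seen` maps a host (name or IP) to the timestamp of its most recent
    event, a hypothetical structure chosen for this sketch.
    """
    return sorted(host for host, ts in last_seen.items() if now - ts > max_silence)


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0)
    last_seen = {
        "10.0.0.5": now - timedelta(minutes=10),
        "10.0.0.9": now - timedelta(hours=3),
    }
    print(silent_hosts(last_seen, now))
```

In practice the threshold should be tuned per log source, since a quiet file server and a busy firewall have very different normal reporting intervals.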

Unexpected Events Per Second (EPS) from Log Sources

Another common pattern found among compromised log sources is that attackers tend to change the configuration files of the installed endpoint agents so that they forward a lot of irrelevant files to the SIEM manager, causing a bandwidth bottleneck between the endpoint agent and the manager. This affects the performance of configured real-time searches, the storage capacity of the underlying index that stores the logs, etc. Organizations must develop a use case to handle this suspicious behavior of log sources. For example, a search (SPL) can be created in Splunk to detect unusual forwarding of events from log sources over one day.

An alert can be configured on such a search to trigger whenever the EPS from a log source exceeds a threshold value, so that the IRT can investigate.
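
To make the EPS threshold concrete, here is a minimal Python sketch that converts per-source event counts for one day into events per second and flags the sources above a limit. The source names, counts, and threshold are invented for illustration; real values would come from the SIEM's own indexing statistics.

```python
SECONDS_PER_DAY = 86400


def eps_by_source(event_counts, period_seconds=SECONDS_PER_DAY):
    """Convert event counts collected over `period_seconds` into average EPS."""
    return {source: count / period_seconds for source, count in event_counts.items()}


def noisy_sources(event_counts, eps_threshold, period_seconds=SECONDS_PER_DAY):
    """Return {source: eps} for sources whose average EPS exceeds the threshold."""
    rates = eps_by_source(event_counts, period_seconds)
    return {source: rate for source, rate in rates.items() if rate > eps_threshold}


if __name__ == "__main__":
    daily_counts = {"fw01": 864000, "ws17": 8640}  # hypothetical daily totals
    print(noisy_sources(daily_counts, eps_threshold=5))
```

A fixed threshold is the simplest option; comparing each source against its own historical average is a natural refinement, since normal EPS varies widely between source types.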

Use Case 5

Malware Check

These days, organizations believe in protecting their network end to end, from the network perimeter, with devices like firewalls and Network Intrusion Prevention Systems (NIPS), to the endpoint hosts, with security features like antivirus and Host Intrusion Prevention Systems (HIPS). However, most organizations collect reports of security incidents from these products in standalone mode, which brings problems such as false positives.

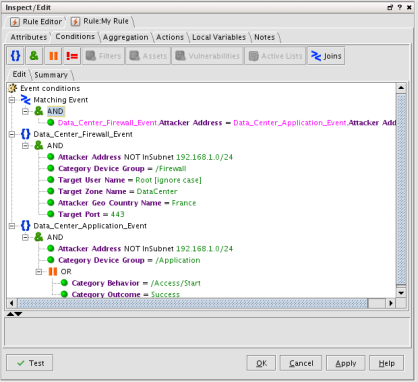

Correlation logic is the backbone of every SIEM solution, and correlation is more effective when it is built over the output of disparate log sources. For example, an organization can correlate various security events, such as unusual port activity at the firewall, suspicious DNS requests, warnings from the Web Application Firewall and IDS/IPS, and threats recognized by antivirus and HIPS, to detect a potential threat. Organizations can build the following sub-use cases under this category.

· Unusual network traffic spikes to and from sources.

· Endpoints with maximum number of malware threats.

· Top trends of malware observed: detected, prevented, mitigated.

· Brute force pattern check on bastion host.
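
The idea of correlating disparate log sources can be sketched in a few lines. The Python example below flags a host only when at least two different kinds of security product report it within a short window, which is one simple way to cut down on the false positives of standalone reports. The alert tuple shape, the ten-minute window, and the source labels are assumptions for illustration, not any SIEM's built-in rule.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def correlate(alerts, window=timedelta(minutes=10), min_sources=2):
    """Return hosts reported by >= `min_sources` distinct source types in `window`.

    `alerts` is an iterable of (timestamp, host, source_type) tuples,
    a hypothetical shape chosen for this sketch.
    """
    by_host = defaultdict(list)
    for ts, host, source_type in alerts:
        by_host[host].append((ts, source_type))

    flagged = set()
    for host, items in by_host.items():
        items.sort()  # order each host's alerts by time
        for i, (start, _) in enumerate(items):
            # Distinct source types seen within `window` of this alert.
            sources = {src for ts, src in items[i:] if ts - start <= window}
            if len(sources) >= min_sources:
                flagged.add(host)
                break
    return flagged


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    sample = [
        (t0, "pc-12", "firewall"),
        (t0 + timedelta(minutes=5), "pc-12", "antivirus"),
        (t0, "pc-30", "firewall"),
    ]
    print(correlate(sample))
```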

Use Case 6

Detection of Anomalous Ports, Services and Unpatched Hosts/Network Devices

Hosts or network devices usually get exploited because they are often left unhardened and unpatched. Organizations must first develop a baseline hardening guideline that includes rules for all ports and services required by business needs, in addition to best practices like “default deny-all”.

For example, to check which services are being started, System logs from Event Viewer must be fed into the SIEM solution, and a corresponding correlation search must be created against the source name “Service Control Manager” to detect which anomalous services were started or stopped.
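
A minimal Python sketch of that check follows. It assumes the Service Control Manager state-change messages have the form "The <name> service entered the running state." and that the organization keeps a baseline set of expected service names; both the message pattern and the baseline contents here are assumptions for illustration and should be verified against real logs.

```python
import re

# Assumed wording of a Service Control Manager state-change message.
STATE_CHANGE = re.compile(
    r"^The (?P<service>.+) service entered the (?P<state>running|stopped) state\.$"
)


def anomalous_service_changes(messages, baseline):
    """Return (service, state) pairs for services that are not in `baseline`.

    `messages` is an iterable of event message strings; `baseline` is the set
    of service names expected on the host (hypothetical for this sketch).
    """
    hits = []
    for message in messages:
        match = STATE_CHANGE.match(message.strip())
        if match and match.group("service") not in baseline:
            hits.append((match.group("service"), match.group("state")))
    return hits


if __name__ == "__main__":
    baseline = {"Windows Update", "Print Spooler"}
    log = [
        "The Windows Update service entered the running state.",
        "The Remote Helper service entered the running state.",
    ]
    print(anomalous_service_changes(log, baseline))
```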

Organizations can also check for vulnerable ports and exposed services by deploying a vulnerability manager and running a regular scan on the network. The report can be fed into the SIEM solution to produce a more comprehensive report that includes the risk rating of the machines in the network. Some use cases that an organization can build from these reports are:

· Top vulnerabilities detected in network.

· Hosts in the network with the most vulnerabilities.

Another important aspect that an organization should constantly monitor as part of the SIEM process is whether all clients or endpoints are properly patched with software updates; the client patch status information should be fed into the SIEM solution. There are various ways an organization can plan for this check.

· Organizations can check the patch-related status by deploying a vulnerability manager and running a regular scan for unpatched endpoints.

· Organizations can deploy a “centralized update manager” like WSUS and feed the update status of endpoints into the SIEM solution, or feed the logs of the update manager's agent deployed on the endpoints directly into SIEM, to detect all unpatched endpoints in the network.

CONCLUSION

The above use cases are not a comprehensive SIEM security checklist, but to succeed with SIEM, they should be implemented at a minimum as part of every organization’s checklist.